Contact the CTL

Please feel free to contact the CTL with feedback, questions, and suggested resources around generative AI for teaching and learning.

"5 Things to Know About ChatGPT"

For a quick overview of a generative AI tool commonly used in higher education, see this CTL resource, which was initially created at the launch of ChatGPT and is updated periodically with current resources.

Policies

The following list represents a basic primer for the acceptable use of generative AI tools at UT-Austin (updated November 2023).

| Policy area | Guidance | University policy and source |
| --- | --- | --- |
| Academic Honesty | The use of generative AI tools to create responses to course assignments in a way that is unacceptable to the course instructor may be considered a case of academic dishonesty by the university. Contact the Student Conduct and Academic Integrity office at UT with any concerns about student work. | “It is strongly recommended that you outline any individual expectations for assignments completion, including parameters around group work, authorized resources, citation requirements, etc. in the assignment directions. Clear and detailed expectations not only reduce the likelihood of a possible violation, but they also aid the Student Conduct team in holding students accountable that fail to adhere to the assignment directions.” “Your Syllabus at UT-Austin” (https://provost.utexas.edu/aps-faculty-archive/your-syllabus-at-ut-austin/) |
| Pedagogical/Course Requirements | As much as possible, faculty and instructors need to signal their intention to use generative AI tools as "course materials," especially in cases where any kind of financial obligation is incumbent upon the student, and carefully weigh the costs and benefits of using these tools. Please stay up to date on policies around this evolving area. | "All required course materials – textbooks, course packets, access codes, iClickers, school supply items, etc. – must be submitted to the Co-op. If an item is unavailable at the Co-op, it will still be listed. For courses without any required materials, course material information still needs to be reported by flagging “No Text Required.” Courses that include and distribute textual materials that are included in the program costs (such as Option II and III programs) must also provide this information. … Even though these materials do not need to be purchased by either the Co-op or by students, state legislation requires that we communicate all required course materials to students. Pursuant to this legislation, students must be able to view courses that require only OER. To make this possible, instructors must report all course materials adoptions, regardless of cost." "Textbook Affordability & Open Educational Resources" (https://provost.utexas.edu/finance-reporting-compliance/textbook-affordability-open-educational-resources/) |
| Information Technology and Data Policies | Because generative AI tools are a kind of information technology, the university has defined their acceptable use. | “ChatGPT or similar AI Tools must not be used to generate output that would be considered non-public. Examples include but are not limited to generating proprietary or unpublished research; legal analysis or advice; recruitment, personnel or disciplinary decision making; completion of academic work in a manner not allowed by the instructor; creation of non-public instructional materials; and grading.” “Acceptable Use of ChatGPT and Similar AI Tools” (https://security.utexas.edu/ai-tools) |

While it is essential to chronicle the benefits and challenges of student use of generative AI tools, it is equally essential to consider how these tools impact our institutional environments.

The U.S. Department of Education Office of Educational Technology's report, "Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations" (2023), should be compulsory reading for anyone considering the ethics of using AI in the classroom. Overarching questions for this report include the following:

- What is our collective vision of a desirable and achievable educational system that leverages automation to advance learning while protecting and centering human agency?

- How and on what timeline will we be ready with necessary guidelines and guardrails, as well as convincing evidence of positive impacts, so that constituents can ethically and equitably implement this vision widely?

The following recommendations are culled from the OET report.

1. Guiding questions for considering the ethical adoption of generative AI tools in teaching and learning contexts

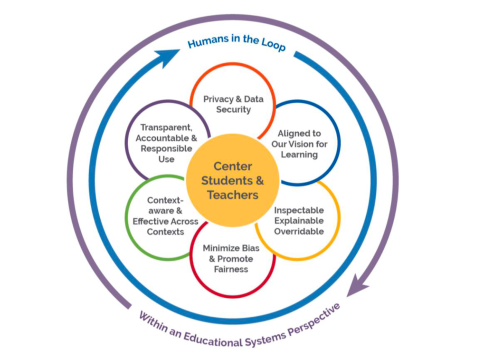

From a systemic perspective, the following considerations represent the ecosystem of concerns that can inform decision-makers about the benefits and challenges of adopting generative AI tools into the education landscape.

- Alignment of the AI Model to Educators’ Vision for Learning: When choosing to use AI in educational systems, decision makers prioritize educational goals, the fit to all we know about how people learn, and alignment to evidence-based best practices in education.

- Data Privacy: Ensuring security and privacy of student, teacher, and other human data in AI systems is essential.

- Notice and Explanation: Educators can inspect educational technology to determine whether and how AI is being incorporated within systems. Educators push for AI models that can explain the basis for detecting patterns and/or for making recommendations, and people retain control over these suggestions.

- Algorithmic Discrimination Protections: Developers and implementers of AI in education take strong steps to minimize bias and promote fairness in AI models.

- Safe and Effective Systems: The use of AI models in education is based on evidence of efficacy (using standards already established in education for this purpose) and works for diverse learners and in varied educational settings.

- Human Alternatives, Consideration and Feedback: AI models support transparent, accountable, and responsible use of AI in education by involving humans in the loop to ensure that educational values and principles are prioritized.

2. Guiding questions for considering the value of generative AI tools for instructors:

- Is AI improving the quality of an educator’s day-to-day work? Are teachers experiencing less burden and more ability to focus and effectively teach their students?

- As AI reduces one type of teaching burden, are we preventing new responsibilities or additional workloads from being shifted and assigned to teachers in a manner that negates the potential benefits of AI?

- Is classroom AI use providing teachers with more detailed insights into their students and their strengths while protecting their privacy?

- Do teachers have oversight of AI systems used with their learners? Are they exercising control appropriately in the use of AI-enabled tools and systems, or inappropriately yielding decision-making to these systems and tools?

- When AI systems are being used to support teachers or to enhance instruction, are the protections against surveillance adequate?

- To what extent are teachers able to exercise voice and decision-making to improve equity, reduce bias, and increase cultural responsiveness in the use of AI-enabled tools and systems?

References

Bankhwal, M., Bisht, A., Chui, M., Roberts, R., Van Heteren, A., & McKinsey Digital. (2024). AI for social good: Improving lives and protecting the planet.

Ferrara, E. (2023). GenAI against humanity: Nefarious applications of generative artificial intelligence and large language models. SSRN. https://ssrn.com/abstract=4614223 or http://dx.doi.org/10.2139/ssrn.4614223

U.S. Department of Education, Office of Educational Technology. (2023). Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations. Washington, DC.